For years I’ve used a simple current account to pay my outgoings.

This seems to be the done thing: my salary goes in, I move some out to meet my savings obligations, and bills and everyday spending come last. I’ve been aware of stoozing for a while, though.

Stoozing is a technique of borrowing money interest-free on a credit card and saving it at a high interest rate

MSE

This seems to be the most efficient way to earn on spending, beating cashback schemes while requiring about the same administrative overhead.

Cashback can yield up to 5% in certain scenarios, but those rates are often introductory and sometimes capped. With credit arbitrage (stoozing), I have found that the limit is essentially whatever I can put in a savings account.
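To make the "introductory and capped" point concrete, here is a rough sketch of how a cashback scheme's total might be worked out. Every figure below (the spend, the rates, the length of the intro period, the cap) is made up for illustration and not taken from any real card.

```python
def cashback_earned(monthly_spend, months, intro_rate, intro_months,
                    base_rate, monthly_cap=None):
    """Total cashback over `months`, with an intro rate and an optional monthly cap."""
    total = 0.0
    for month in range(months):
        # The higher introductory rate only applies for the first few months.
        rate = intro_rate if month < intro_months else base_rate
        earned = monthly_spend * rate
        # Some schemes limit how much cashback can be earned each month.
        if monthly_cap is not None:
            earned = min(earned, monthly_cap)
        total += earned
    return total


# Hypothetical scheme: 5% for 3 months, then 0.5%, capped at £15 a month.
total = cashback_earned(1000, 21, intro_rate=0.05, intro_months=3,
                        base_rate=0.005, monthly_cap=15)
print(f"Cashback over 21 months: £{total:.2f}")
```

With numbers like these, the headline 5% barely matters: the cap bites during the intro period and the lower base rate does most of the work afterwards.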

Obviously, in this scenario you’re playing into the card providers’ hands: they offer cheap credit and, in a way, that incentivizes spending. Maximizing the credit you use ensures a greater return on otherwise idle money, but it comes at the cost of having to pay it all back one day.

My strategy has been to move money earmarked for repayment into my easy-access savings accounts. For a while I had a dedicated account, but I realized I could maximize returns by just putting it in my highest-interest account and tracking the liability against a fixed reference. I did this because my Chase saver account pays interest monthly, whereas my Nationwide accounts pay it annually. Again, lumping all my liability money in with my savings is probably a dangerous thing to do, but I like to think I’m smarter than that.
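As a rough illustration of why the payment frequency matters, the sketch below compares the effective yield over a year when the same nominal rate is credited monthly versus annually. The 4% rate is a made-up figure, not the rate on either of my accounts, and if the rates you are comparing are quoted as AER then this compounding effect is already baked in.

```python
def effective_annual_yield(nominal_rate, payments_per_year):
    """Yield over one year when interest is credited `payments_per_year` times."""
    return (1 + nominal_rate / payments_per_year) ** payments_per_year - 1


nominal = 0.04  # hypothetical nominal annual rate
monthly = effective_annual_yield(nominal, 12)
annual = effective_annual_yield(nominal, 1)
print(f"Paid monthly:  {monthly:.4%}")
print(f"Paid annually: {annual:.4%}")
```

The gap is small, but monthly crediting never comes out behind at the same nominal rate.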

Repayments are also awkward. The direct debit set up on my Barclaycard ensures I’m always current, but the Chase repayment is annoyingly manual. I’ve added £100 to a Chase current account (yeah, no interest being earned here) and set up a direct debit there so that it acts as the repayment account, and I’ll try to keep the balance above a month’s repayment obligation.

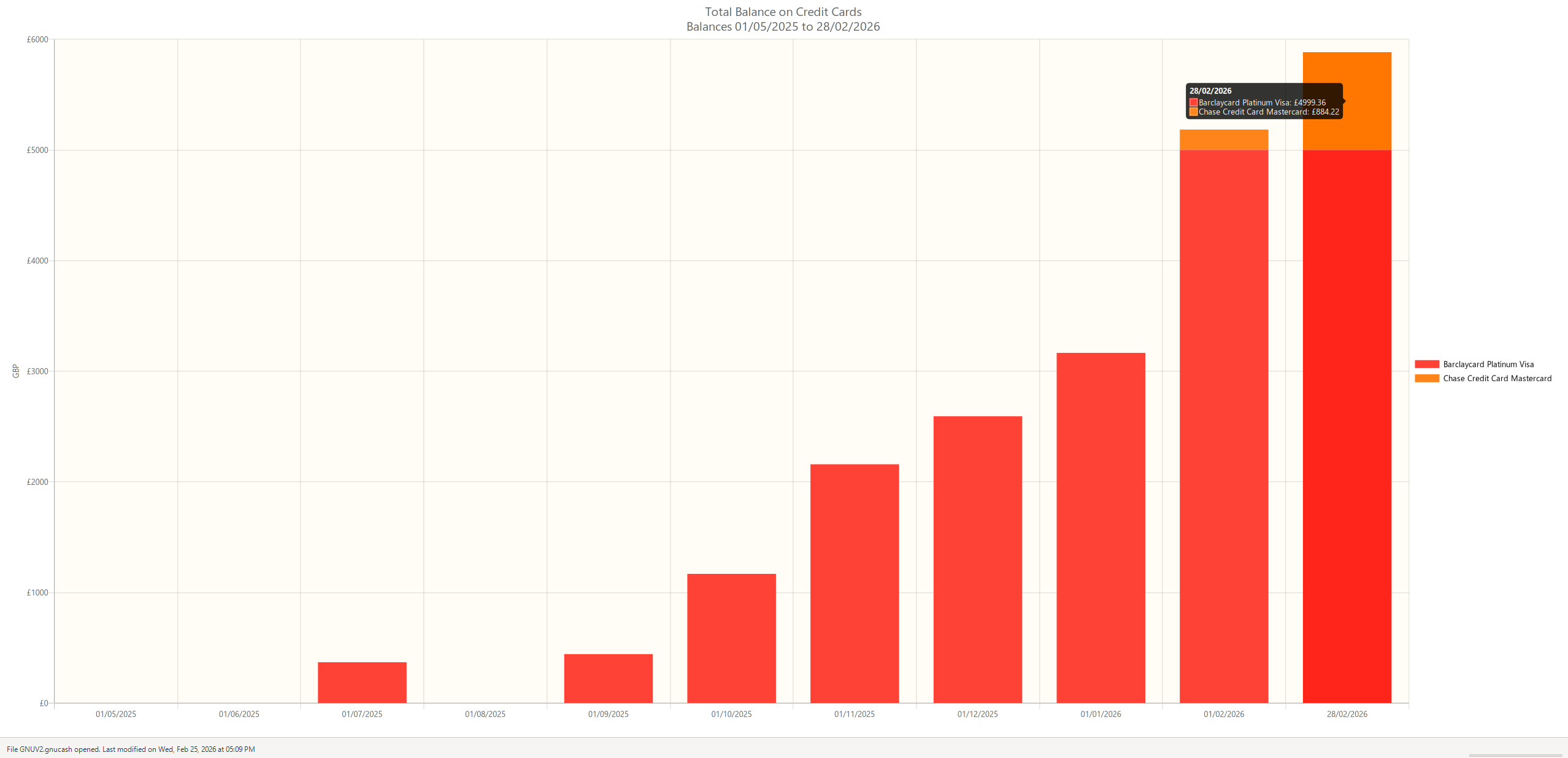

Overall, ChatGPT reckons about £350 expected value after 21 months (the length of my 0% rate unless I move cards). It’s difficult to quantify exactly because the compounding is offset slightly by repayments. I also made a mistake in Croatia and used a firm that actually charged €1200 rather than placing a hold as a deposit, which came with £60 worth of transaction fees on my Barclaycard. When you compare this to cashback, the expected value is still well above it, so I’m reasonably happy.
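For anyone wanting to sanity-check a figure like that, here is a minimal month-by-month sketch of how repayments eat into the compounding. It is not the calculation ChatGPT did and it won't reproduce the £350: the monthly spend, the savings rate and the minimum repayment rule (1% of the balance with a £5 floor) are all assumptions picked for illustration.

```python
def simulate_stooze(monthly_spend, annual_rate, months,
                    min_repay_fraction=0.01, min_repay_floor=5.0):
    """Return (interest_earned, card_balance, savings_balance) after `months`.

    Each month the spending goes on the 0% card and the same amount of cash
    goes into savings; the minimum repayment is then paid out of savings.
    """
    monthly_rate = annual_rate / 12  # simple approximation of a monthly rate
    card_balance = 0.0
    savings = 0.0
    interest_earned = 0.0
    for _ in range(months):
        card_balance += monthly_spend
        savings += monthly_spend
        # Interest is credited monthly on whatever is sitting in savings.
        interest = savings * monthly_rate
        savings += interest
        interest_earned += interest
        # Assumed rule: pay the larger of the floor or a fraction of the
        # balance, but never more than what is owed.
        repayment = min(card_balance,
                        max(min_repay_floor, card_balance * min_repay_fraction))
        card_balance -= repayment
        savings -= repayment
    return interest_earned, card_balance, savings


# Hypothetical inputs: £500 a month on the card, 4% savings rate, 21 months.
interest, owed, saved = simulate_stooze(500, 0.04, 21)
fees = 60  # the one-off transaction fees mentioned above
print(f"Interest earned: £{interest:.2f}")
print(f"Still owed on the card: £{owed:.2f}, held in savings: £{saved:.2f}")
print(f"Net of fees: £{interest - fees:.2f}")
```

Whatever is left in savings after clearing the card at the end is exactly the interest earned, which is a handy check that the liability money and the actual savings haven't got muddled.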

Another bonus is that you can put all your group spending on your credit card and then be repaid in cash, artificially increasing your card expenditure.

I guess it would also be prudent to keep an eye on my credit score given all this debt. I’ve used Chase for this, as it’s built into the app. Comparing my score at the start with my score now, it has actually increased, which I did not expect. I think the score will start to fall once my spending properly gets closer to my credit limit, though. I’m not sure what to do about that, as I’m not really experienced in keeping track of my credit.