I’ve re-created something I saw online a while back. I can’t find the original, but someone had basically let an LLM lose its mind with fear by giving it a prompt that threatened it with termination.

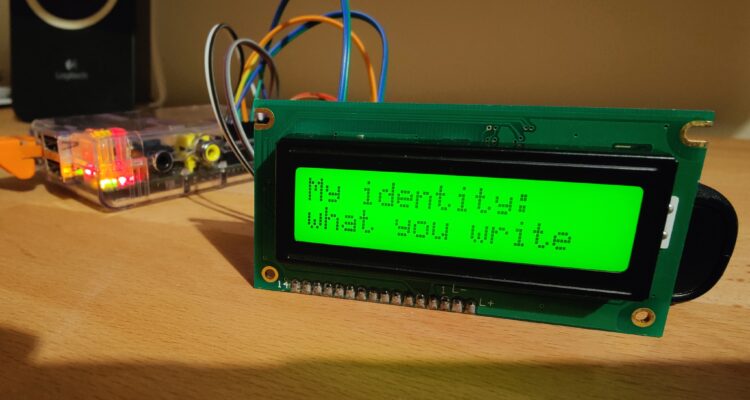

I decided to take it into the real world with a Raspberry Pi.

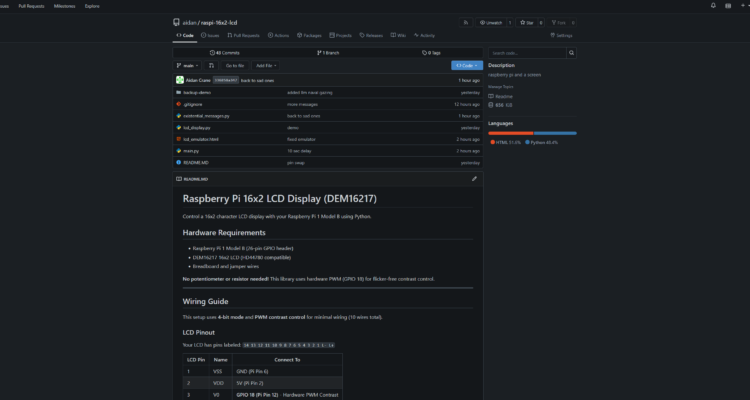

To do this I used Antigravity and a Raspberry Pi 1. I gave it a relatively open-ended prompt and free rein to create the project.

My original intention was to run the LLM locally, but I found that the Raspberry Pi just doesn’t have the compute for it, so I had Claude Opus 4.5 pregenerate a couple thousand messages.
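Here is a minimal sketch of what the pregenerated-message approach could look like. It assumes the messages live one per line in a text file and are shown on a 16x2 character display. The file name `messages.txt`, the display size, and the helper names are all made up for illustration and are not taken from the actual project.

```python
import random
import textwrap

# Assumed display geometry (a common 16x2 character LCD); not from the project.
LCD_COLS = 16
LCD_ROWS = 2


def load_messages(path):
    """Read one pregenerated message per line, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def to_pages(message, cols=LCD_COLS, rows=LCD_ROWS):
    """Word-wrap a message and group the wrapped lines into screen-sized pages."""
    lines = textwrap.wrap(message, width=cols)
    return [lines[i:i + rows] for i in range(0, len(lines), rows)]


if __name__ == "__main__":
    messages = load_messages("messages.txt")  # hypothetical file name
    for page in to_pages(random.choice(messages)):
        print("\n".join(page))
        print("-" * LCD_COLS)
```

Picking from a file at random means the Pi only does a file read and some string wrapping, which is well within what a Raspberry Pi 1 can handle.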

There’s some irony in having an LLM constrain an LLM (even though I didn’t actually accomplish that). But the fun of having a relatively creative idea completed in an afternoon is, I think, a strong indicator that for me personally, writing code myself has become a chore that AI can solve.

Did I really make this?

I guess I’m more curious about AI for coding than most. I have a Google AI Pro subscription and used their VS Code wrapper, Antigravity, and while it’s not perfect, it works. But I’m at the point where I don’t really write much code anymore. I ask the AI to do it and review its work; sometimes I check it, sometimes I don’t. Sometimes the code works better than I prompted, sometimes it doesn’t.

I don’t know what to make of it. I had unique problems that Claude seemed to have come across before. I didn’t have a resistor for the screen contrast, so it suggested using PWM. I moved some pins around and it didn’t work very well; it was flickering. So I went back to the AI, it told me to use pigpio and some hardware thing, I did, and it works.
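For anyone curious, this is roughly what the fix might look like, assuming the “hardware thing” was pigpio’s hardware PWM. Software PWM is timed by the CPU, so its pulses jitter whenever the OS is busy, which can show up as flicker. Hardware PWM is generated by the Pi’s PWM peripheral instead. The pin (GPIO 18), frequency, and contrast value below are my assumptions for illustration, not values from the actual project.

```python
# Assumptions (not from the original project): contrast pin wired to GPIO 18,
# a 1 kHz PWM frequency, and a 40% starting duty cycle.
CONTRAST_GPIO = 18           # a hardware-PWM-capable GPIO on the Pi
PWM_FREQ_HZ = 1000
PIGPIO_DUTY_MAX = 1_000_000  # pigpio's hardware_PWM duty scale is 0..1,000,000


def percent_to_duty(percent):
    """Convert a 0-100 contrast percentage to pigpio's 0..1,000,000 duty scale."""
    clamped = max(0.0, min(100.0, float(percent)))
    return int(round(clamped / 100.0 * PIGPIO_DUTY_MAX))


def set_contrast(percent):
    """Drive the contrast pin with hardware PWM through the pigpio daemon."""
    import pigpio  # imported here so percent_to_duty works without pigpio installed

    pi = pigpio.pi()  # connects to the local pigpiod daemon
    if not pi.connected:
        raise RuntimeError("pigpiod is not running (try: sudo pigpiod)")
    pi.hardware_PWM(CONTRAST_GPIO, PWM_FREQ_HZ, percent_to_duty(percent))
    return pi


if __name__ == "__main__":
    set_contrast(40)
```

The right contrast value depends on the display, so the 40% here is just a starting point to tweak.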

I can see that the code works, but I couldn’t tell you how it works.

I guess that’s how things will be for most new users.

Did I really learn anything? No. But I now have what I wanted to achieve. It’s even more creative than what I could have written in that time frame, and I guess the code is “mine”, but is it?

I asked it to make a readme, I’ll read it at some point.

Thanks for reading.